UAVA: A Dataset for UAV Assistant Tasks

Overview

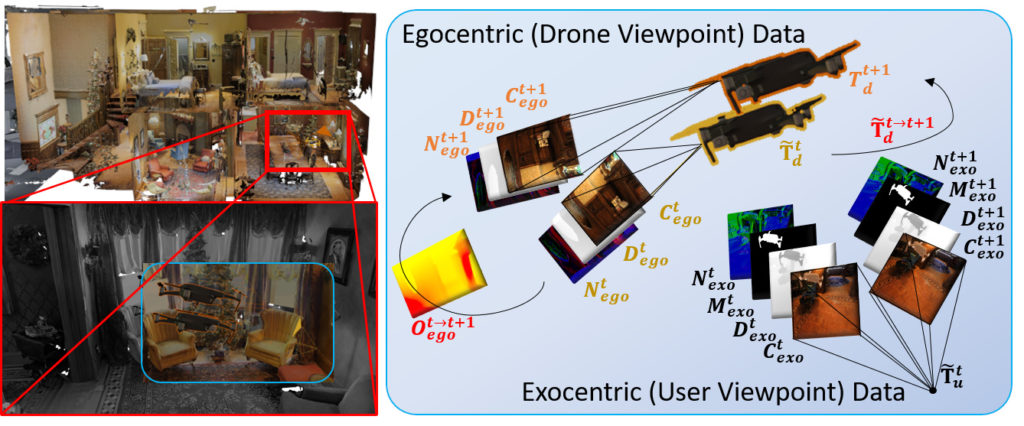

UAVA is designed to foster applications that treat UAVs and humans as cooperative agents. We combine a real-world 3D scanned dataset (Matterport3D), physically-based rendering, and a gamified simulator for collecting realistic drone navigation trajectories to generate realistic multimodal data from both the user’s exocentric view of the drone and the drone’s egocentric view.

Motivation

With the advent of low-cost commercial mini-UAVs, new applications and modes of interaction have emerged. However, most existing UAV-related datasets do not target such applications, which hinders the development of data-driven methods. We introduce UAVA, a dataset designed to facilitate the data-driven development of such methods. The dataset was created by leveraging an existing photorealistic dataset of indoor scenes and by following a carefully designed gamification approach.

Trajectories

To collect realistic and unbiased trajectories, we developed a game in Unity3D using AirSim. Collectible cube “coins” were placed at each of the known panorama positions (anchors), so players had to navigate the whole scene to collect them. We record the drone’s world pose within the scene at each time step t. At the following link, we provide the sampled trajectories for each scene as well as the recorded drone poses.
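As an illustration of how a recorded play-through can be consumed, the sketch below stores one world pose per time step t and samples consecutive (t, t + 1) pairs, the same pairing used for the optical-flow frames. It is a minimal sketch under stated assumptions. The record layout (a position plus a unit quaternion), the names `DronePose`, `consecutive_pairs` and `path_length`, and the `stride` parameter are all hypothetical and do not describe the released file format.

```python
from dataclasses import dataclass
from math import dist
from typing import List, Tuple


@dataclass(frozen=True)
class DronePose:
    """Hypothetical record of the drone's world pose at one time step."""
    t: int                                          # time step index
    position: Tuple[float, float, float]            # world position (x, y, z), assumed layout
    orientation: Tuple[float, float, float, float]  # unit quaternion (w, x, y, z), assumed layout


def consecutive_pairs(trajectory: List[DronePose],
                      stride: int = 1) -> List[Tuple[DronePose, DronePose]]:
    """Return (t, t + 1) pose pairs from a dense trajectory.

    `stride` (hypothetical parameter) keeps every `stride`-th pair.
    """
    if stride < 1:
        raise ValueError("stride must be >= 1")
    ordered = sorted(trajectory, key=lambda p: p.t)  # make sure poses are in time order
    return [(ordered[i], ordered[i + 1]) for i in range(0, len(ordered) - 1, stride)]


def path_length(trajectory: List[DronePose]) -> float:
    """Total distance flown: sum of Euclidean steps between consecutive poses."""
    ordered = sorted(trajectory, key=lambda p: p.t)
    return sum(dist(a.position, b.position) for a, b in zip(ordered, ordered[1:]))
```

For example, three poses at (0, 0, 1.5), (3, 4, 1.5) and (3, 4, 2.5) yield two consecutive pairs and a path length of 6.0 (a 5.0 step followed by a 1.0 step). Sorting by `t` inside each helper is a defensive choice, because recorded samples may not arrive in time order.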

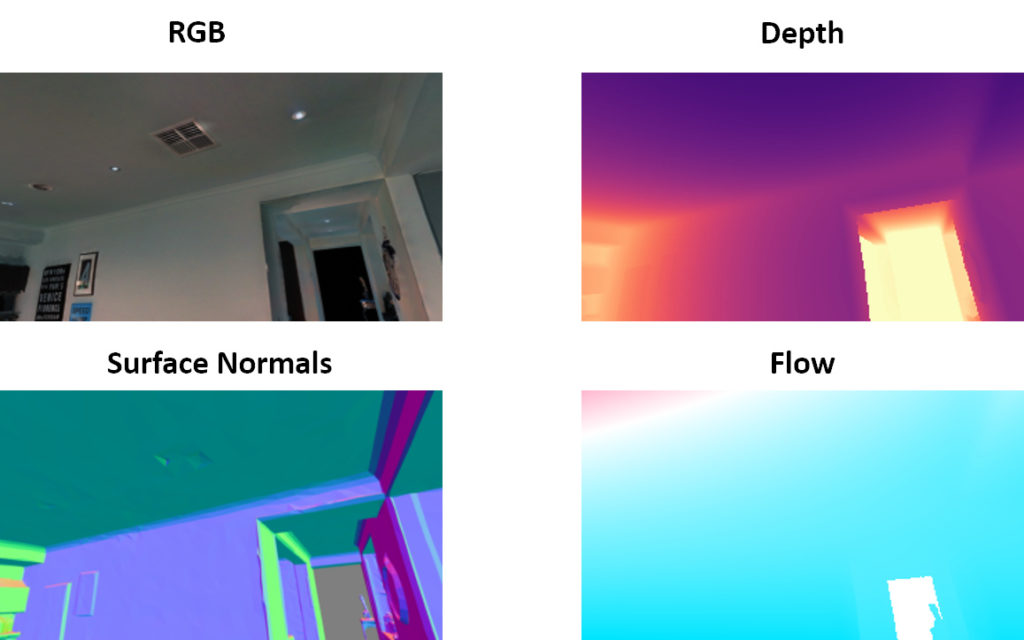

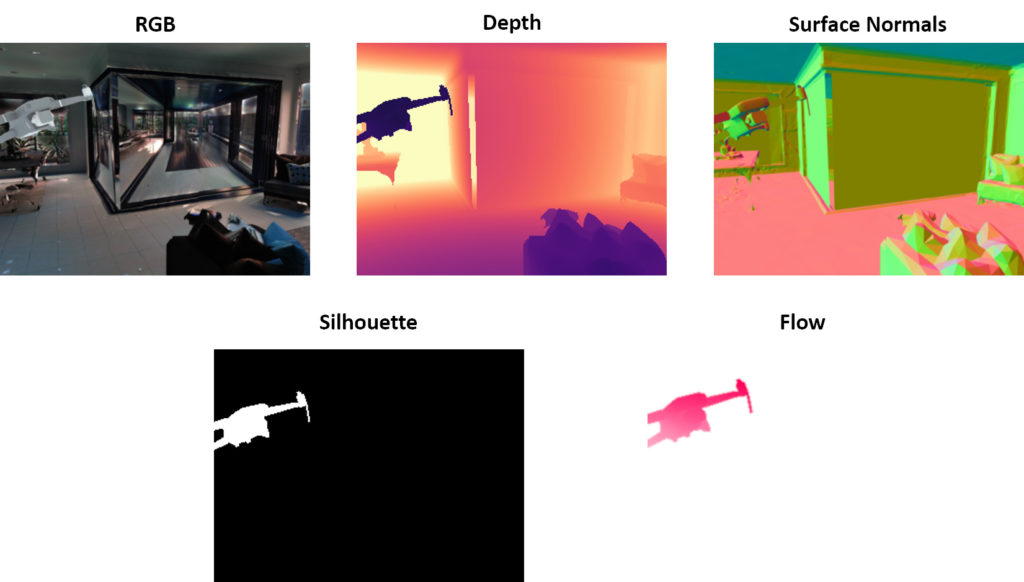

Modalities

We use Blender to synthesize our dataset. For the egocentric “UAV” view, we generate color images, depth, and surface maps, as well as the optical flow between two consecutive frames t and t + 1 sampled from the dense play-through trajectories. For the exocentric “user” view, in addition to the aforementioned modalities, we use a composition pipeline to add a photorealistically shaded drone into the scene and also generate its corresponding silhouette image.
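To make the relationship between the composited exocentric image and its silhouette concrete, the sketch below applies the standard alpha "over" operator to a rendered drone layer and then thresholds the same alpha channel to obtain a binary silhouette. This is a generic compositing sketch, not the authors' Blender composition pipeline. It ignores shadows and any lighting interaction between drone and scene, and it assumes straight (non-premultiplied) alpha in [0, 1]. The function name `composite_drone` and the `threshold` value are hypothetical.

```python
import numpy as np


def composite_drone(background: np.ndarray, drone_rgba: np.ndarray,
                    threshold: float = 0.5):
    """Composite a rendered drone layer over an exocentric background image.

    background: H x W x 3 float array in [0, 1] (scene rendered without the drone).
    drone_rgba: H x W x 4 float array in [0, 1] with straight alpha (assumption).
    threshold:  hypothetical alpha cut-off used to binarize the silhouette.
    Returns (composite RGB image, boolean H x W silhouette mask).
    """
    rgb = drone_rgba[..., :3]
    alpha = drone_rgba[..., 3:4]  # keep a trailing axis so alpha broadcasts over RGB
    # Standard "over" operator: drone in front, scene behind.
    composite = alpha * rgb + (1.0 - alpha) * background
    # The silhouette is derived from the same alpha channel as the composite.
    silhouette = drone_rgba[..., 3] > threshold
    return composite, silhouette
```

On a 2 x 2 black background, a fully opaque white drone pixel stays white, a pixel with alpha 0.25 becomes 0.25 gray, and only the opaque pixel survives in the silhouette at the default threshold. Deriving the mask from the same alpha layer keeps the silhouette pixel-aligned with the composited drone.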

Download

To request access to the dataset, please follow this link.

Samples: